The Illusion of Building Your Own LLM: Why Renting Intelligence is the Smartest Strategy

Don't build a 'Data Center' in the AI Era. Learn from the Cloud Revolution.

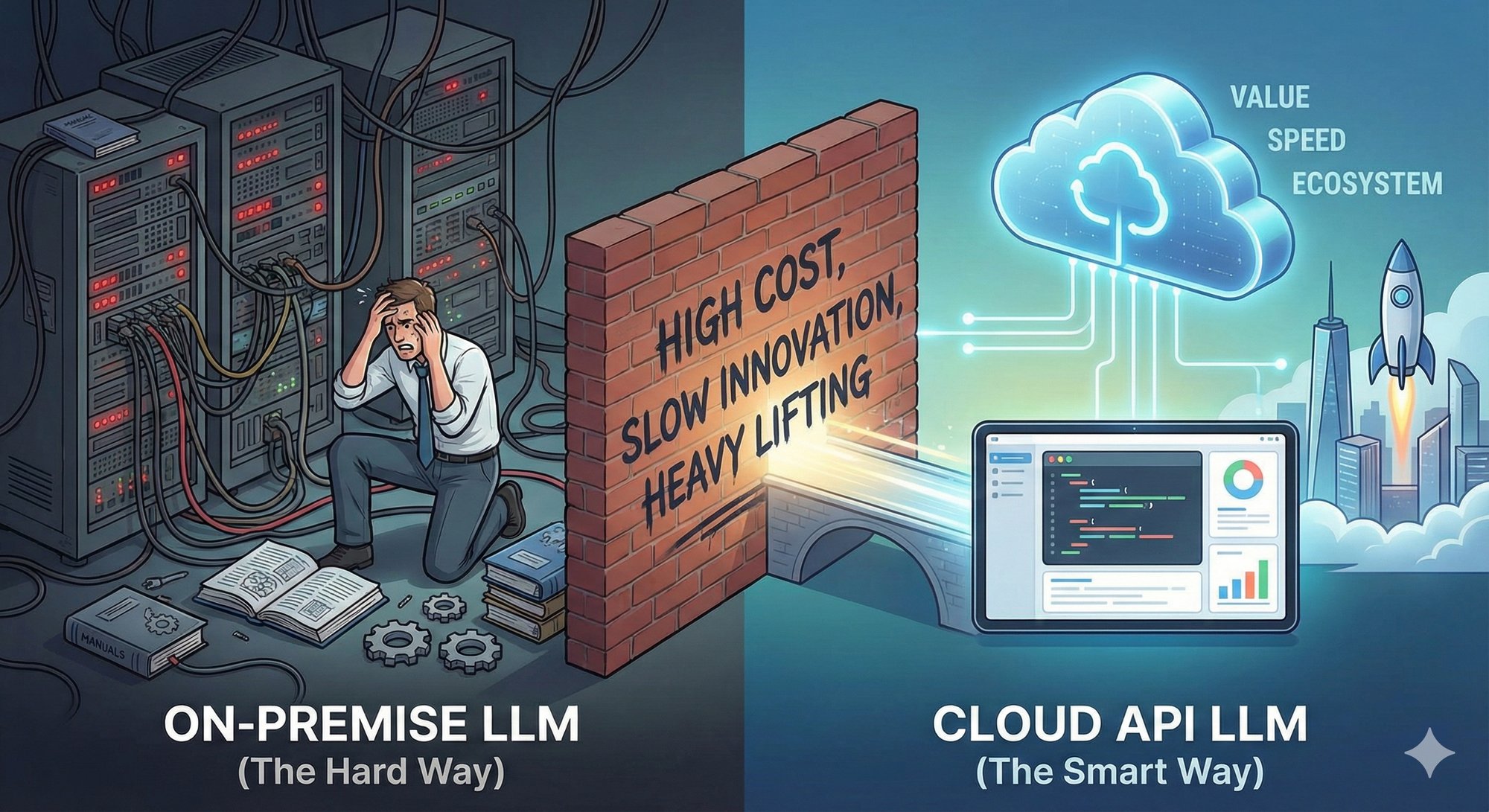

I often hear this from corporate executives and tech leaders: "We want to build our own on-premise LLM to protect our data and optimize for our specific needs."

To put it bluntly: stop right there. Unless you operate in an extremely rigid regulatory environment (for example, a licensed bank whose regulators require certain data never to leave your premises), building your own LLM is an irrational choice in terms of cost, efficiency, and technical debt.

We went through this exact cycle with the cloud. When AWS and Google Cloud emerged, many companies insisted on keeping their own server rooms, citing "security." Eventually, most of them migrated to the cloud for better security and scalability. The LLM market is following the same trajectory.

1. The Unwinnable Race Against Innovation Speed

Imagine you spend six months training and optimizing your own model. By the time you deploy it, OpenAI or Google will likely have released a new generation of models that are smarter, faster, and cheaper. The moment you choose to build in-house, you enter a performance race against big tech companies, and it is a race you cannot win. With APIs, by contrast, you can often move to the latest state-of-the-art (SOTA) model by changing a single line of code: the model ID. The smartest way to keep up with innovation is not to 'own' it, but to 'access' it.
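Here is a minimal sketch of what swapping the model ID can look like in practice, assuming you keep it in configuration rather than hard-coding it. The environment variable `LLM_MODEL_ID`, the placeholder model names, and the request shape are all hypothetical; real provider SDKs use their own field names, and a new model may still need its prompts re-tested before rollout.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class LLMConfig:
    """Settings for a hosted model; an upgrade only changes model_id."""
    model_id: str
    temperature: float = 0.0


def load_config() -> LLMConfig:
    # The model ID comes from the environment, not from code.
    # "example-model-v1" is a hypothetical placeholder, not a real model name.
    return LLMConfig(model_id=os.environ.get("LLM_MODEL_ID", "example-model-v1"))


def build_request(cfg: LLMConfig, prompt: str) -> dict:
    # Payload shaped loosely like common chat-completion APIs (hypothetical);
    # exact field names vary by provider.
    return {
        "model": cfg.model_id,
        "temperature": cfg.temperature,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    # Upgrading is a deployment change: set LLM_MODEL_ID=example-model-v2 and restart.
    print(build_request(load_config(), "Summarize our Q3 report.")["model"])
```

Because the application code never names a model, moving to a newer one is a configuration change rather than a code change.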

2. Tip of the Iceberg: The Model Is Just the Beginning

Building the LLM is not the end. Modern AI applications require much more than just text generation.

- RAG (Retrieval-Augmented Generation): Building vector databases to ground answers in your internal documents.

- Web Search: Integrating real-time search to reduce hallucinations and provide up-to-date info.

- Function Calling: Enabling the AI to interact with other software and APIs.
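To make the RAG bullet concrete, here is a toy sketch of the retrieve-then-prompt step. It assumes plain term-count vectors and cosine similarity as a stand-in for a real embedding model and vector database, and the documents and prompt template are invented for illustration.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    # Bag-of-words term counts; a stand-in for a learned embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Only terms shared by both vectors contribute to the dot product.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank every document by similarity to the query and keep the top k.
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_grounded_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Put the retrieved passages in front of the question so the model answers from them.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    internal_docs = [
        "Refunds are processed within 14 days of the return request.",
        "The cafeteria is open from 8am to 3pm on weekdays.",
        "Enterprise customers receive a dedicated support engineer.",
    ]
    print(build_grounded_prompt("How long do refunds take?", internal_docs, k=1))
```

A production pipeline adds document chunking, embedding, indexing, and re-ranking on top of this, and each of those is a component someone has to build and maintain.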

Commercial API providers offer these features as an integrated ecosystem. If you build in-house, you have to develop, debug, and maintain all of this supporting infrastructure from scratch. This is what Amazon calls "undifferentiated heavy lifting": work that adds no unique value to your core business.
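To give a sense of the glue code involved, here is a minimal sketch of one small piece of function-calling infrastructure: a tool registry and a dispatcher that executes a model-issued call and returns the result as JSON. The call format `{"name": ..., "arguments": {...}}` and the `get_order_status` tool are hypothetical; each provider defines its own tool-call schema.

```python
import json
from typing import Any, Callable

# Registry of Python functions the model is allowed to call.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_order_status(order_id: str) -> dict:
    # Hypothetical business function; a real one would query your order system.
    return {"order_id": order_id, "status": "shipped"}


def dispatch(tool_call_json: str) -> str:
    """Run a tool call of the assumed form {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call.get("name"))
    if fn is None:
        return json.dumps({"error": f"unknown tool: {call.get('name')}"})
    try:
        result = fn(**call.get("arguments", {}))
    except TypeError as exc:  # e.g. wrong or missing arguments from the model
        return json.dumps({"error": str(exc)})
    # The JSON result would be sent back to the model as the tool's output.
    return json.dumps(result)


if __name__ == "__main__":
    print(dispatch('{"name": "get_order_status", "arguments": {"order_id": "A-1001"}}'))
```

Returning errors as JSON instead of raising lets the model see what went wrong and retry with corrected arguments.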

3. Cost Asymmetry: CapEx vs. OpEx

Building in-house locks you into large fixed costs. You need to buy GPU clusters outright (CapEx) or commit to renting them, and hire a team of highly paid AI engineers to keep them running. The meters run even when your users are asleep. APIs, in contrast, are a variable operating expense (OpEx): you pay only for what you use. And as AI commoditizes, per-token prices keep falling sharply. For most enterprise traffic volumes, paying per token is far cheaper than running and cooling your own servers 24/7.
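The asymmetry is easy to put into numbers. Here is a minimal sketch, assuming a flat monthly bill for the in-house option and a single blended per-token price for the API; every figure below is a hypothetical placeholder, not a real quote.

```python
def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    # Variable cost: scales linearly with usage.
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(fixed_monthly_cost: float, price_per_million_tokens: float) -> float:
    # Monthly token volume at which API spend equals the fixed in-house bill.
    return fixed_monthly_cost / price_per_million_tokens * 1_000_000


if __name__ == "__main__":
    # Hypothetical inputs (USD per month); substitute your own numbers.
    gpu_rental = 60_000
    staff = 80_000
    fixed = gpu_rental + staff
    price = 5.0            # blended USD per million tokens (hypothetical)
    usage = 2_000_000_000  # 2 billion tokens per month (hypothetical)

    print(f"API bill:   ${monthly_api_cost(usage, price):,.0f}/month")
    print(f"In-house:   ${fixed:,.0f}/month")
    print(f"Break-even: {break_even_tokens(fixed, price):,.0f} tokens/month")
```

With these placeholder inputs the API bill is $10,000 a month against $140,000 in-house, and the break-even point is 28 billion tokens a month. The sketch ignores utilization, depreciation, and volume discounts; its point is the shape: the in-house line is flat, the API line grows with usage, and below the break-even volume renting wins.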

4. The Data Security Myth

The concern that "I don't want my data used to train the AI" is valid, but building in-house is often the wrong answer to it. Enterprise-grade LLM offerings (for example, those available through AWS, Azure, and Google Cloud) contractually commit not to use your prompts and outputs to train their models, and some also offer zero-data-retention options under which your inputs are not stored after processing. Ask yourself: is it safer to patch your own servers and risk missing a vulnerability, or to reach the model through private endpoints built on infrastructure defended by some of the world's largest security teams?

5. Conclusion: Focus on Value, Not Infrastructure

Your customers don't care what model you use. They only care about the value that model provides to them. Don't waste time reinventing the wheel. Rent the best "brains" (LLMs) available, connect them to your unique data, and focus on building the service your customers want. The winner in the AI era is not the one who 'owns the model,' but the one who 'utilizes the model best.'